CryptoDB

NTT Multiplication for NTT-unfriendly Rings: New Speed Records for Saber and NTRU on Cortex-M4 and AVX2

| Authors: |

|

|---|---|

| Download: | |

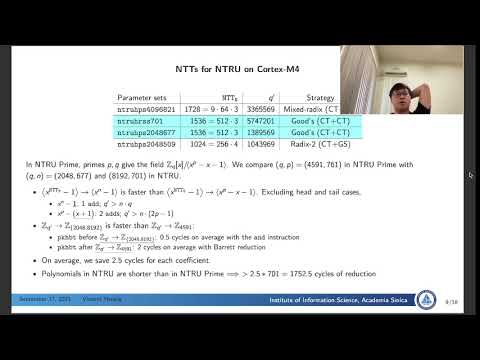

| Abstract: | In this paper, we show how multiplication for polynomial rings used in the NIST PQC finalists Saber and NTRU can be efficiently implemented using the Number-theoretic transform (NTT). We obtain superior performance compared to the previous state of the art implementations using Toom–Cook multiplication on both NIST’s primary software optimization targets AVX2 and Cortex-M4. Interestingly, these two platforms require different approaches: On the Cortex-M4, we use 32-bit NTT-based polynomial multiplication, while on Intel we use two 16-bit NTT-based polynomial multiplications and combine the products using the Chinese Remainder Theorem (CRT).For Saber, the performance gain is particularly pronounced. On Cortex-M4, the Saber NTT-based matrix-vector multiplication is 61% faster than the Toom–Cook multiplication resulting in 22% fewer cycles for Saber encapsulation. For NTRU, the speed-up is less impressive, but still NTT-based multiplication performs better than Toom–Cook for all parameter sets on Cortex-M4. The NTT-based polynomial multiplication for NTRU-HRSS is 10% faster than Toom–Cook which results in a 6% cost reduction for encapsulation. On AVX2, we obtain speed-ups for three out of four NTRU parameter sets.As a further illustration, we also include code for AVX2 and Cortex-M4 for the Chinese Association for Cryptologic Research competition award winner LAC (also a NIST round 2 candidate) which outperforms existing code. |

Video from TCHES 2021

BibTeX

@article{tches-2021-30796,

title={NTT Multiplication for NTT-unfriendly Rings: New Speed Records for Saber and NTRU on Cortex-M4 and AVX2},

journal={IACR Transactions on Cryptographic Hardware and Embedded Systems},

publisher={Ruhr-Universität Bochum},

volume={2021, Issue 2},

pages={159-188},

url={https://tches.iacr.org/index.php/TCHES/article/view/8791},

doi={10.46586/tches.v2021.i2.159-188},

author={Chi-Ming Marvin Chung and Vincent Hwang and Matthias J. Kannwischer and Gregor Seiler and Cheng-Jhih Shih and Bo-Yin Yang},

year=2021

}